Hi! I’m Brad

Last week, Meta pulled a very surprising PR stunt: they laid out which advances in optical and display technology need to happen for Virtual Reality to get visually closer to actual reality. The level of detail they let their engineers go into boggled my mind. I spent nearly a year reading white papers and trying to understand some of these future principles. Seeing them release a video about it made me feel like I could have just relaxed and waited for this onslaught of info to arrive in an easy-to-watch format.

Anyway, beyond my shock: there were some great positives that came from this information being publicly released.

- People see that Varifocal technologies are still being pursued

  - The last time Meta talked about lenses that could adjust to multiple focal points based on eye tracking was in 2019. Because they had been silent about it since, people had the impression that they were no longer pursuing this technology. In my research, the opposite was true. They even acquired a company called ImagineOptix, which was focusing its R&D and manufacturing on developing the thin LC films needed to enable this in mass volumes (Valve was also heavily interested in this company).

- High-brightness displays (up to 20,000 nits) will not harm your eyes

  - On my YouTube channel, I have talked quite a bit about how we need displays reaching a maximum brightness rating of around 10,000 nits. Usually the first response I got from viewers was: “won’t that blind us?” And I would usually have to go into the same spiel that it would not. In the longform showing of the Starburst HDR prototype, they gave brightness examples from everyday indoor environments. And the realization for many was: “oh, most things in our reality are much brighter than what displays currently put out.” There will be much more on brightness later in this article.

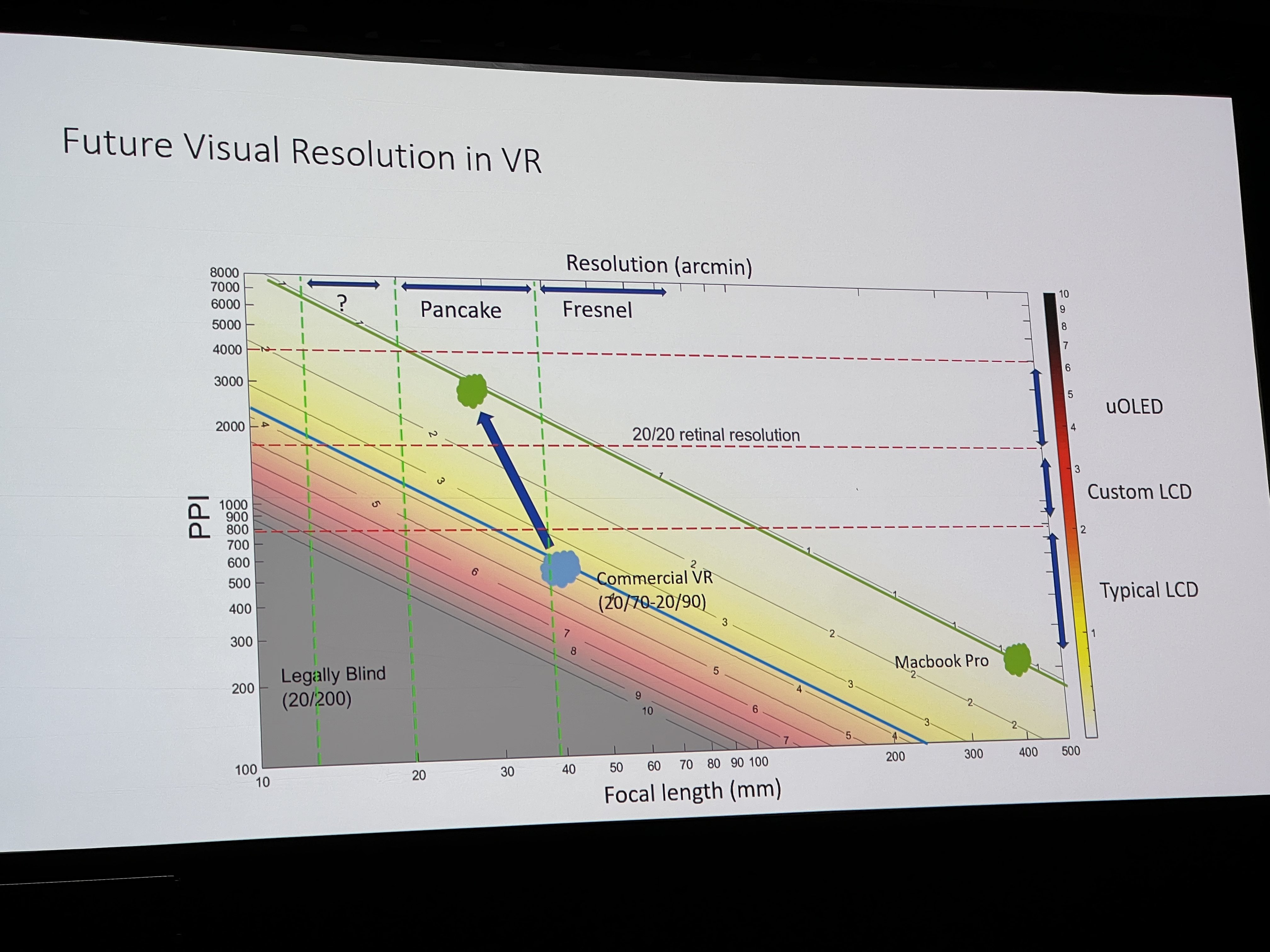

- Resolution increases are the easiest thing to do in Near-eye Displays

- Thinner form factors are coming. And Pancake-type optics are the reason

- No matter what you do: Optics will give off some sort of distortion. But eye tracking can alleviate that

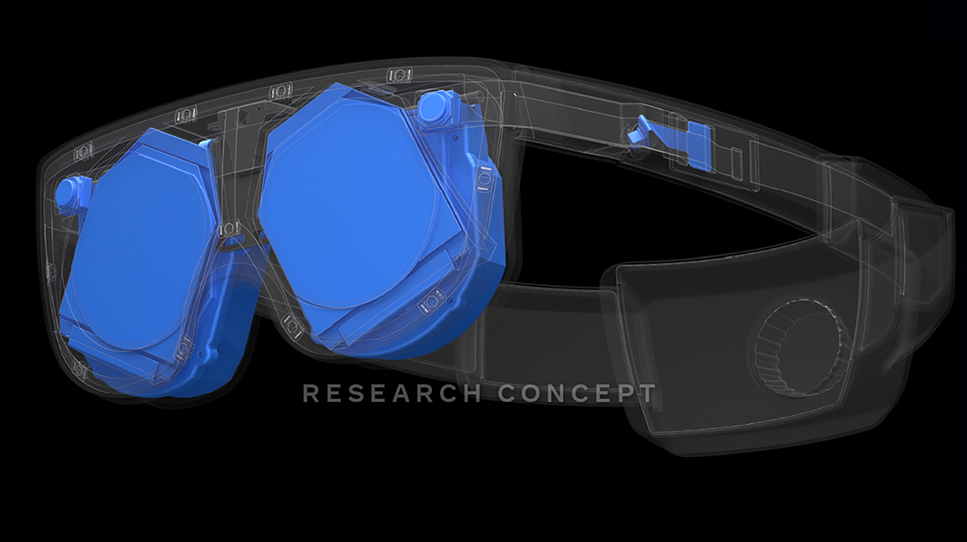

In this article, I don’t want to dwell too much on the four individual prototypes. I think it is fair to note that each of these demonstrations is an extreme example of one visual “test.” I want to focus more on the Mirror Lake concept, which is what Meta is setting out to create “long-term.” It takes the best of these visual extremes and packs them into a small device.

First, I want to comment on brightness again. Getting higher brightness in displays is very important in the XR industry. You might hear stories about how MicroLEDs can push to insane levels of brightness. And since those use inorganic materials, they won’t have lifetime issues in the process. This is why they are the “Dream” display. However, they have their own problems right now, and I don’t expect those to be solved well enough to scale to VR devices for many years still.

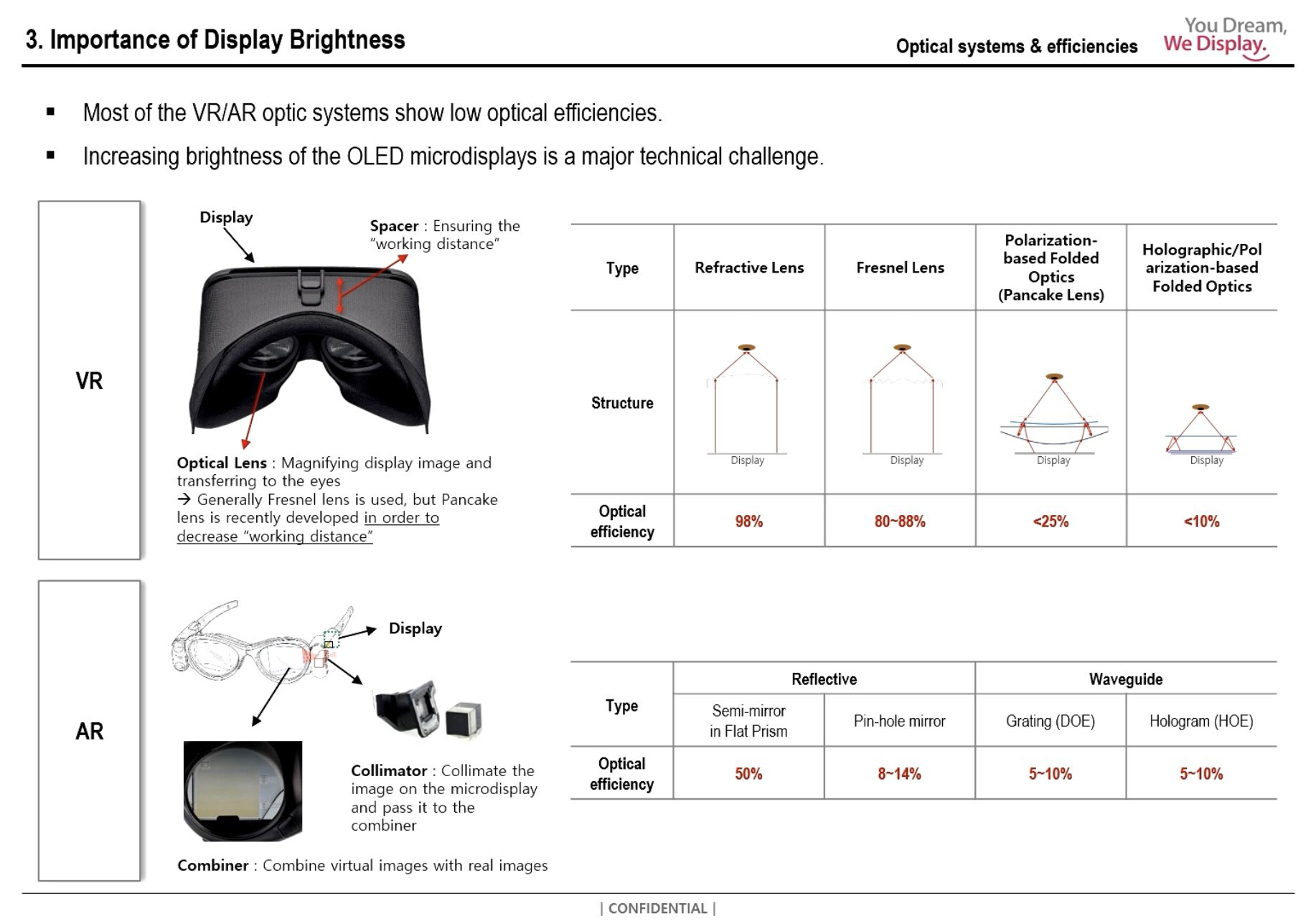

Going back to the brightness issue: large companies like Meta don’t necessarily want efficient high-brightness displays in order to recreate the Starburst prototype (except Sony, who achieve HDR because they are sticking with Fresnel optics). They mostly want them for the purpose of thinner optics!

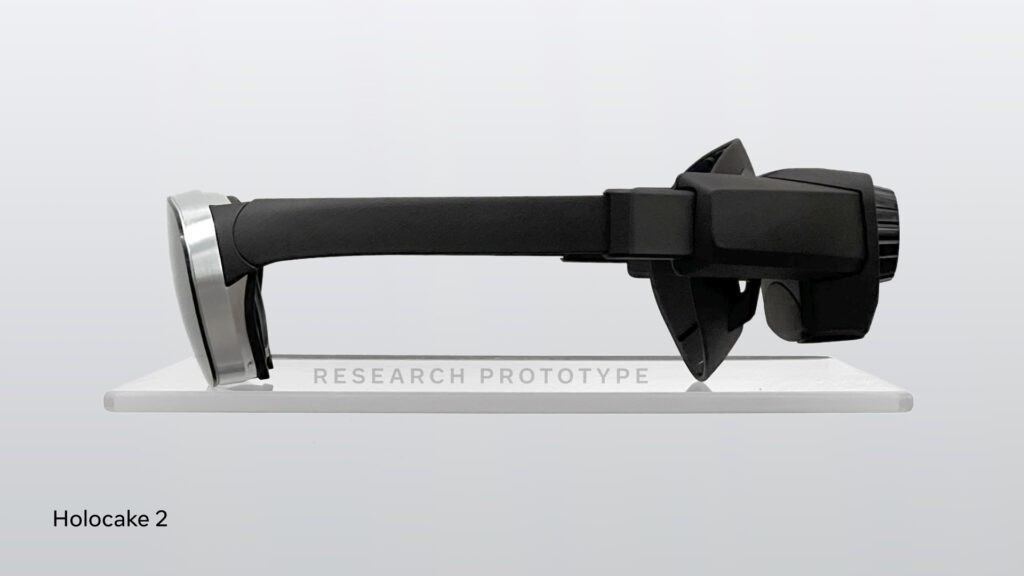

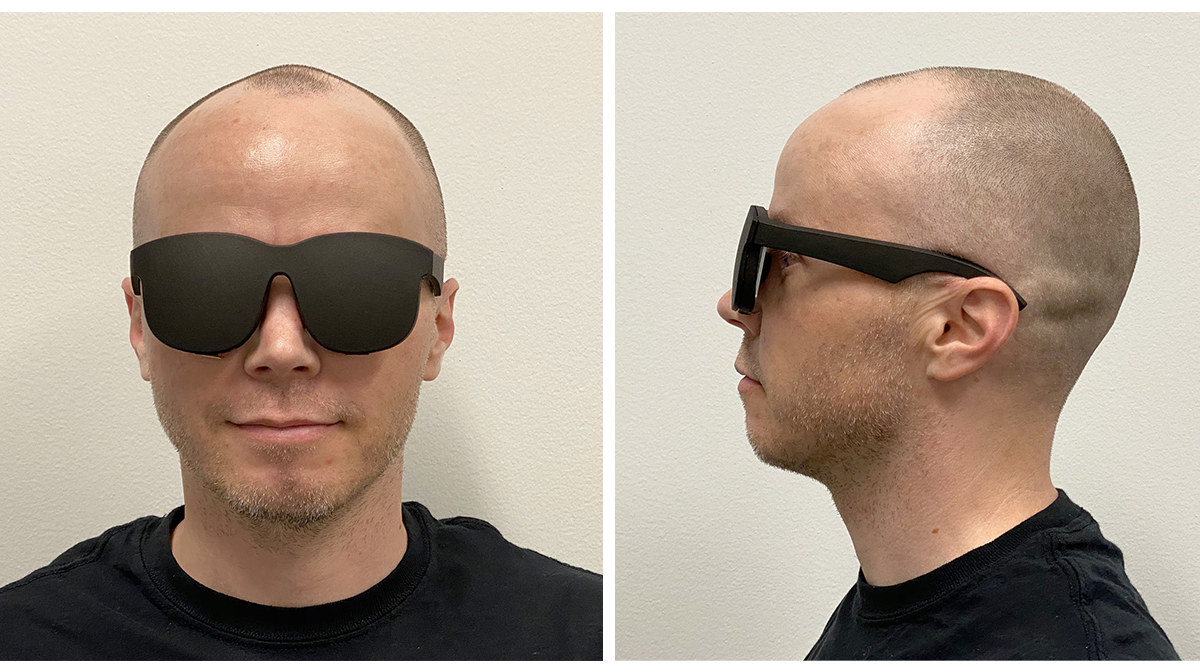

Above is one of the four prototypes that Meta showed off: the Holocake 2 HMD. I have talked a lot about how pancake lenses would allow an HMD to become thinner and more compact. Holocake replaces one of the bulky glass/plastic elements inside with a stack of thin holographic films that effectively performs the same function.

Doing this not only lets the components behind the display come closer to the user’s face (which gives a better center of gravity), but by replacing something thick with something thin, it also reduces the weight a bit. It sounds like the perfect lens system. I have also expected for a while that you could add solid-state LC varifocal elements to a lens stack like this, and the Meta engineer who was demoing one of the prototypes to Norm from Tested confirmed this. He also mentioned they could theoretically slap a “varifocal attachment on the outside”, which I found incredibly interesting.

The question that I have noticed people asking themselves after seeing Holocake 2 is “why is Meta saying this system is far away?” There are a couple of main reasons I want to dive into.

Going back to brightness: large companies like Meta do not want high-brightness displays in order to ship a consumerized copy of “Starburst.” They want them so they can use inefficient optics AND run these displays at a 10% duty cycle. With both of these in play, 20,000 nits at the panel will turn into 200 nits before it reaches the eye.
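To make that arithmetic concrete, here is a minimal sketch of the brightness budget. The 20,000 nit panel and the 10% duty cycle come from the paragraph above. The 10% optical efficiency is an assumption: it is simply the value implied by 20,000 nits ending up as 200, not a measured figure for any particular lens stack. The function name is made up for illustration.

```python
def brightness_at_eye(panel_nits, duty_cycle, optical_efficiency):
    """Time-averaged brightness (nits) that reaches the eye.

    duty_cycle: fraction of each frame the display is actually lit.
    optical_efficiency: fraction of light that survives the lens stack.
    """
    return panel_nits * duty_cycle * optical_efficiency


panel_nits = 20_000          # peak panel brightness, from the text
duty_cycle = 0.10            # 10% duty cycle, from the text
optical_efficiency = 0.10    # ASSUMPTION: implied by 20,000 -> 200 nits

after_duty_cycle = panel_nits * duty_cycle
at_eye = brightness_at_eye(panel_nits, duty_cycle, optical_efficiency)

print(f"After duty cycle: {after_duty_cycle:.0f} nits")
print(f"At the eye:       {at_eye:.0f} nits")
```

Each loss is a simple multiplier, so a more efficient lens or a longer duty cycle raises the result proportionally.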

Holocake 2 is a lens system that I imagine multiple OEMs are looking into for next-generation HMDs. Most VR headsets on the market, including the Oculus Quest 2, still use Fresnel lenses. They are cheap to make and don’t require displays that are different from those in an everyday smartphone. That is exactly where the issue lies, however. While the Quest 2 has pushed the industry past 10 million units sold, my sources tell me constantly that retention is still an issue. Some would say it’s due to software content, and while that is probably also true, I think hardware comfort is a big reason, especially during the hot summer seasons.

As someone who has tried a variety of prototype HMDs employing pancake-type optics (of varying quality), I have come to at least agree that current HMDs are not good enough for long-term use. Anyone who tries the next generation coming this year and next will understand this sentiment. Ming-Chi Kuo has said for the past year that both Apple and Meta are buying pancake optics in bulk for their high-end HMDs. More specifically: Meta is purchasing 2P Pancake lenses at ~$15 per stack, and Apple is purchasing 3P Pancake lenses at ~$25 per stack.

I have always struggled to understand what the difference will be between the lenses that Meta is ordering and the ones Apple is ordering. An extra piece in a stack that costs 10 dollars more (per eye) is notable. I can only speculate here, and I invite others to do so in the comments, or let me know via email.

Meta showed off a ton in these videos, and talked even more. Yet at the same time, I cannot help but feel they are obviously not showing their full hand. My reasons come from industry source comments, public Meta showcases at industry events, and what I understand about lasers.

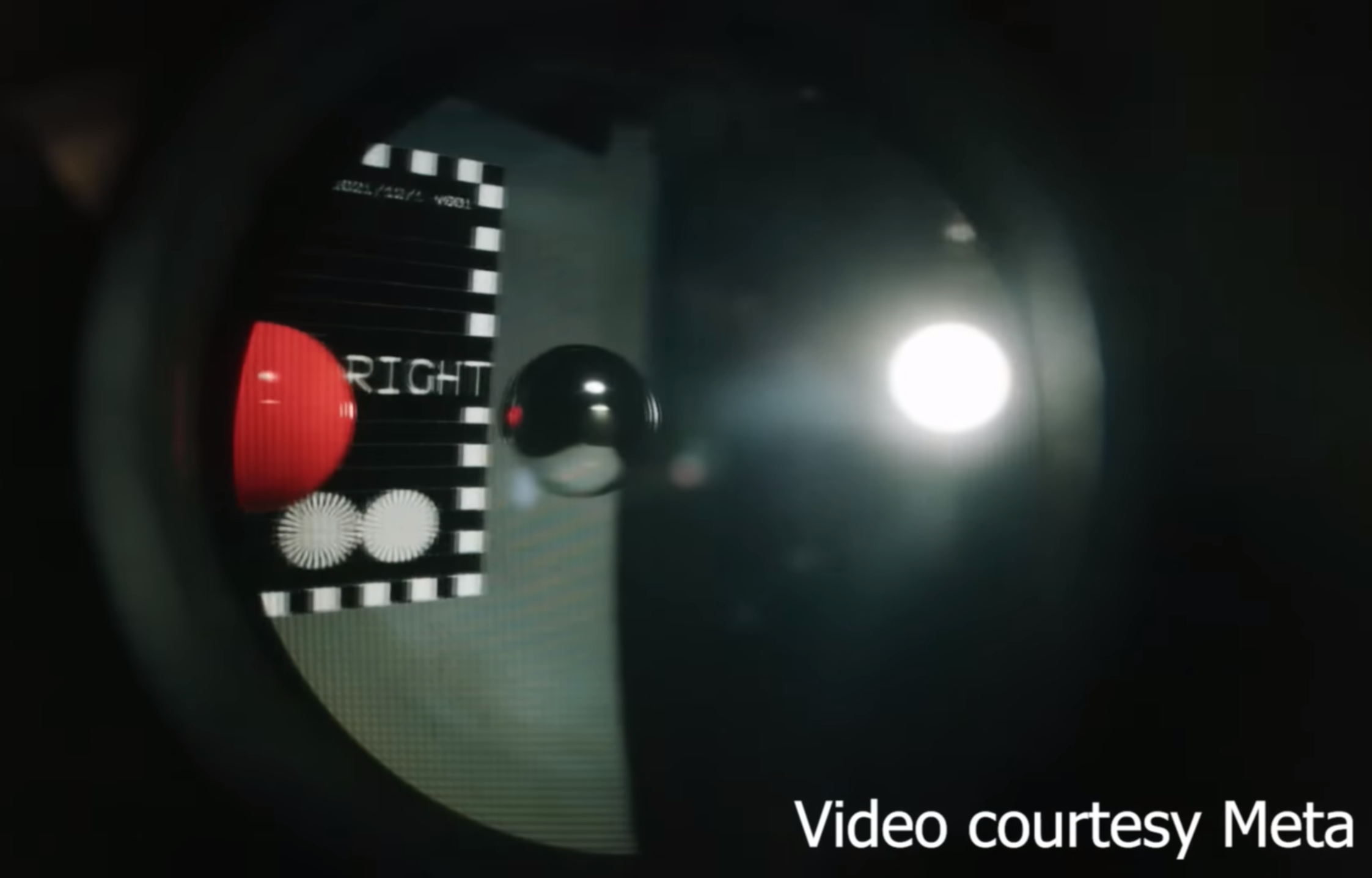

Every Holocake prototype Meta has shown off in public research has used lasers. The way it works is that they take an off-the-shelf LCD and replace the LED backlight with one that integrates RGB lasers. Anything involving lasers sounds incredibly futuristic, but the main reason they do it is that these thin holographic films work best with what are called “collimated light sources.” Most LEDs, including OLEDs, give off a type of light known as “Lambertian.” Instead of a single ray, they tend to emit all of their light into a super wide cone. This can cause scatter and huge inefficiencies in display emission.
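To give a feel for how wasteful a Lambertian emitter is when the optics only want near-parallel light, here is a small sketch. For an ideal Lambertian source, intensity falls off with the cosine of the angle from the surface normal, and the fraction of total emitted light that lands inside a cone of half-angle θ works out to sin²(θ). The cone angles below are purely illustrative; I do not have acceptance-angle figures for Meta's holographic films, and the function name is my own.

```python
import math


def lambertian_fraction_within(half_angle_deg):
    """Fraction of an ideal Lambertian emitter's light inside a cone.

    Integrating I0*cos(theta) over the cone's solid angle and dividing
    by the full-hemisphere total gives sin^2(half_angle).
    """
    theta = math.radians(half_angle_deg)
    return math.sin(theta) ** 2


# Illustrative cone half-angles only (ASSUMPTION, not from any datasheet)
for angle in (10, 20, 30, 60, 90):
    share = lambertian_fraction_within(angle)
    print(f"{angle:>2} deg half-angle cone: {share:.1%} of emitted light")
```

Under this idealized model, only about 3% of the light sits within 10 degrees of straight ahead, which is why collimation matters so much for optics like these.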

Facebook Reality Labs seemingly wanted to go to the far extreme and use lasers for their prototypes. Lasers are the opposite of LEDs: they are fully collimated, but at a huge cost. First of all, laser light can be very expensive to employ. Lasers also have a characteristic known as “speckle,” which produces a very distracting visual effect. And if things go VERY wrong, they can potentially be dangerous for your eyes.

In my opinion, and I could be wrong, I do not expect lasers to take over from traditional LED light sources in systems like these for a while. So now you have to figure out: “How do we get Holocake without lasers?” I have a few ideas from what I am seeing in display industry trends.

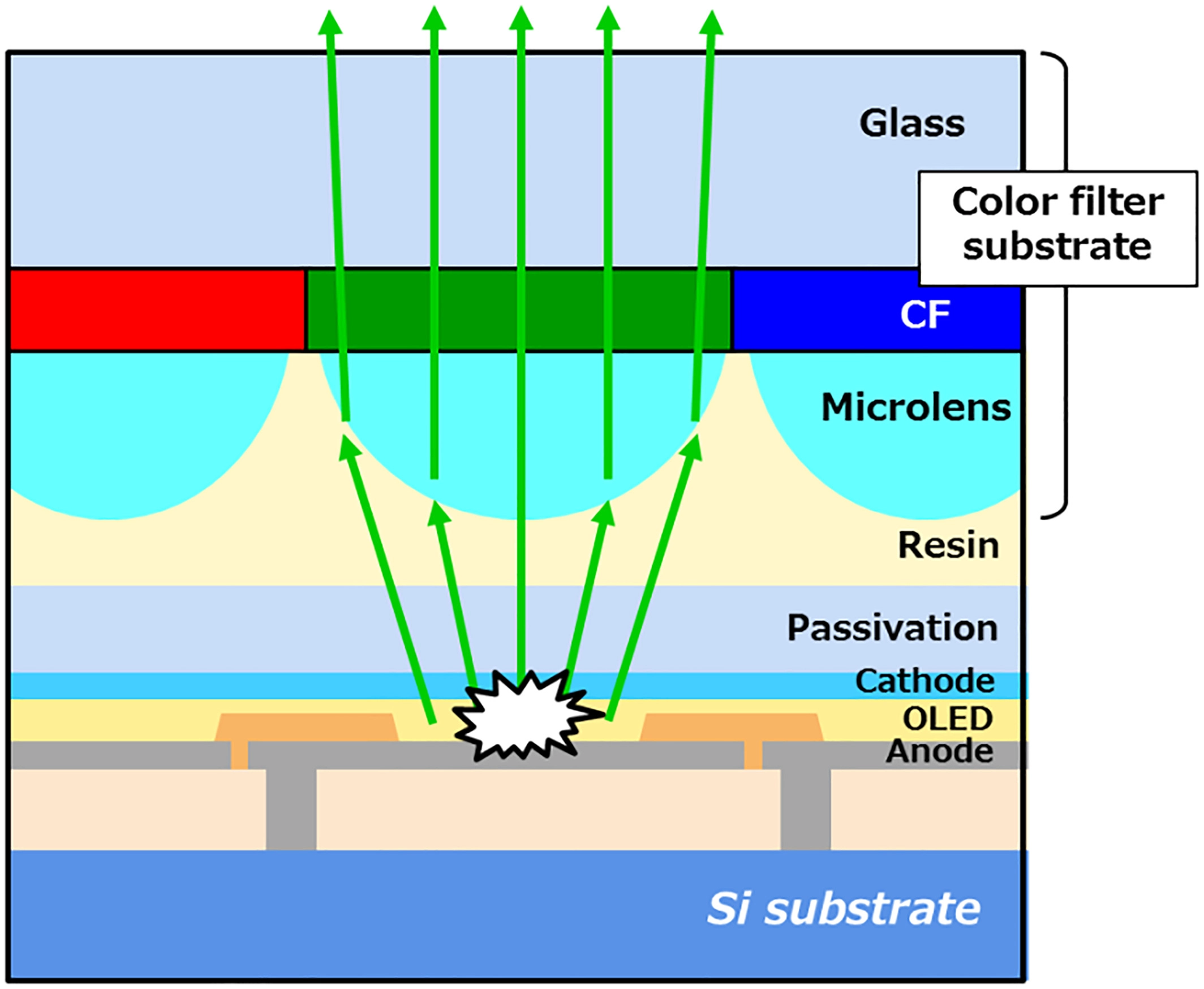

MicroLens arrays have become a very popular topic in the display industry. The idea is to add a layer of small lenses to a display stack that not only collimates the Lambertian light, but can also improve brightness, lifetime, and efficiency.

In 2019, Sony won the coveted “Best of Displayweek” for a paper about how they made Micro-OLED displays with an added microlens array. This achievement showed great results: it not only collimated the light in a single stack of WOLED, but also increased the brightness from 1600 to 5000 nits with no added power consumption. This is very impressive. Other OLED microdisplay players in the industry, such as eMagin or APS Research, believe that if you removed the inefficient color filters on top and directly patterned the RGB onto silicon wafers, you could start with ~10,000 nits from a single stack. Adding Sony’s MicroLens arrays to a display like this would be potentially amazing for brightness, and could possibly allow Holocake optics to become usable.
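As a back-of-the-envelope sketch of what that combination could mean: the 1600 and 5000 nit figures and the ~10,000 nit starting point come from the paragraph above. Applying Sony's gain unchanged to a direct-patterned panel is purely my assumption for illustration; nobody quoted here has claimed that result, and real gains would depend on pixel geometry and the emitter stack.

```python
def microlens_gain(nits_before, nits_after):
    """Brightness multiplier from adding a microlens array."""
    return nits_after / nits_before


# Figures from the text: Sony's WOLED result with a microlens array
gain = microlens_gain(1600, 5000)

# ~10,000 nits is the direct-patterned RGB starting point from the text
direct_patterned_nits = 10_000

# ASSUMPTION: the same gain carries over unchanged (hypothetical)
hypothetical_nits = direct_patterned_nits * gain

print(f"Microlens gain: {gain:.3f}x")
print(f"Hypothetical combined brightness: {hypothetical_nits:.0f} nits")
```

Treat the combined number as an upper-end "what if" rather than a prediction.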

There is one more tiny addition you could make to a display stack to make it even more efficient and narrow the light cone further. Microcavities are formed early in the deposition process. And they are exactly what they sound like: you make the light emitter as thin and angled as possible to guide the light where you want it. Neither MicroLens Arrays nor Microcavities were ever very useful for other display use cases because they sacrifice viewing angle. But for Near Eye devices, that is not a worry.

So who will be able to use Holocake lenses first? Well, I think there are more issues to solve than collimation and light efficiency. Manufacturing these technologies at scale is another thing that OEMs have been trying to solve. In fact, even Meta themselves were at SID Displayweek this year with a keynote begging companies like LG, Samsung, BOE, etc. to start developing the fabs to make the technologies they need. I focus a lot on Micro-OLED for VR, but that is because it’s where I see things going first in terms of displays that leave the smartphone-display philosophy behind. Meta’s keynote also agrees that’s where it’s going.